Raw Nginx log

    192.168.189.1 - - [19/Apr/2019:11:15:09 +0800] "GET /favicon.ico HTTP/1.1" 404 548 "http://192.168.189.84/" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.121 Safari/537.36"

- Client IP address: 192.168.189.1
- Access time: [19/Apr/2019:11:15:09 +0800]
- Request method (GET/POST): GET
- Request URL: /favicon.ico, followed by the protocol version HTTP/1.1
- Status code: 404
- Response body size: 548
- Referer (the link the request came from): "http://192.168.189.84/"
- User Agent: the last quoted field on the line

Architecture:

- 192.168.189.83: Kibana, Elasticsearch
- 192.168.189.84: Logstash, Nginx

Using the Grok Debugger in Kibana to learn how Logstash extracts log fields with regular expressions

1. grok uses (?<xxx>pattern) to capture the text matched by the pattern into the field xxx.

Extract the client IP:

    (?<clientip>[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3})

Extract the time:

    \[(?<requesttime>[^ ]+ \+[0-9]+)\]
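The two patterns can also be checked outside Kibana. The following is a minimal sketch in Python, not part of the original setup; the names `LOG_LINE`, `CLIENT_IP`, and `REQUEST_TIME` are made up for illustration. Note that Python's `re` module writes named groups as `(?P<name>...)`, while grok writes them as `(?<name>...)`.

```python
import re

# Sample line copied from the raw Nginx log shown above.
LOG_LINE = (
    '192.168.189.1 - - [19/Apr/2019:11:15:09 +0800] '
    '"GET /favicon.ico HTTP/1.1" 404 548 '
    '"http://192.168.189.84/" '
    '"Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/72.0.3626.121 Safari/537.36"'
)

# Same expressions as the grok patterns, with (?<name> rewritten as (?P<name>.
CLIENT_IP = re.compile(
    r'(?P<clientip>[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3})'
)
REQUEST_TIME = re.compile(r'\[(?P<requesttime>[^ ]+ \+[0-9]+)\]')

# search() returns the first match anywhere in the line.
clientip = CLIENT_IP.search(LOG_LINE).group('clientip')
requesttime = REQUEST_TIME.search(LOG_LINE).group('requesttime')

print(clientip)
print(requesttime)
```

The time pattern only accepts offsets written with a plus sign, which is enough for the +0800 zone used here.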

Extract the IP, date, request method, and URL:

    (?<clientip>[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}) - - \[(?<requesttime>[^ ]+ \+[0-9]+)\] "(?<requesttype>[A-Z]+) (?<requesturl>[^ ]+) HTTP/\d\.\d"

Also extract the status code and the response body size:

    (?<clientip>[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}) - - \[(?<requesttime>[^ ]+ \+[0-9]+)\] "(?<requesttype>[A-Z]+) (?<requesturl>[^ ]+) HTTP/\d\.\d" (?<status>[0-9]+) (?<bodysize>[0-9]+)

Extract the Referer and the User Agent:

    (?<clientip>[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}) - - \[(?<requesttime>[^ ]+ \+[0-9]+)\] "(?<requesttype>[A-Z]+) (?<requesturl>[^ ]+) HTTP/\d\.\d" (?<status>[0-9]+) (?<bodysize>[0-9]+) "(?<url>[^"]+)" "(?<ua>[^"]+)"

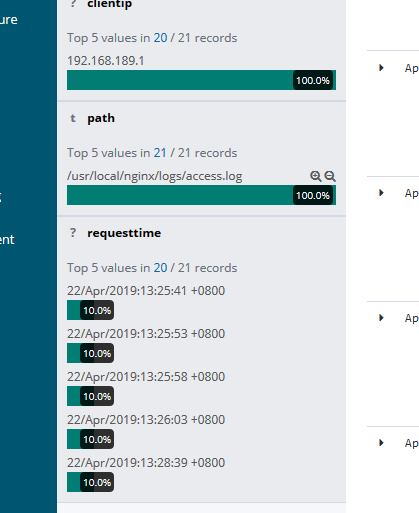
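As a cross-check of the complete pattern, here is a small Python sketch that turns the grok expression into a Python regex and returns all eight fields as a dictionary. The helper names `grok_to_python` and `parse_access_line` are hypothetical, and the simple string replacement is only safe because this pattern contains no lookbehind constructs such as `(?<=` or `(?<!`. The dot in the HTTP version is written escaped (`\d\.\d`) so that it matches only a literal dot.

```python
import re

# Full grok pattern for the access log, split across lines for readability.
GROK_PATTERN = (
    r'(?<clientip>[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}) - - '
    r'\[(?<requesttime>[^ ]+ \+[0-9]+)\] '
    r'"(?<requesttype>[A-Z]+) (?<requesturl>[^ ]+) HTTP/\d\.\d" '
    r'(?<status>[0-9]+) (?<bodysize>[0-9]+) '
    r'"(?<url>[^"]+)" "(?<ua>[^"]+)"'
)


def grok_to_python(pattern):
    """Rewrite grok-style (?<name>...) groups as Python (?P<name>...) groups."""
    return re.compile(pattern.replace('(?<', '(?P<'))


ACCESS_RE = grok_to_python(GROK_PATTERN)


def parse_access_line(line):
    """Return the captured fields as a dict, or None if the line does not match."""
    match = ACCESS_RE.match(line)
    return match.groupdict() if match else None


# Sample line copied from the raw Nginx log.
LOG_LINE = (
    '192.168.189.1 - - [19/Apr/2019:11:15:09 +0800] '
    '"GET /favicon.ico HTTP/1.1" 404 548 '
    '"http://192.168.189.84/" '
    '"Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/72.0.3626.121 Safari/537.36"'
)

fields = parse_access_line(LOG_LINE)
for name, value in fields.items():
    print(f'{name}: {value}')
```

All captured values are strings, as with grok captures that have no explicit type conversion.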

+++++++++++++++++++++

Edit the Logstash configuration file and add a filter:

    vim /usr/local/logstash-6.6.0/config/logstash.conf

File contents:

    input {
      file {
        path => "/usr/local/nginx/logs/access.log"
      }
    }
    filter {
      grok {
        match => {
          "message" => '(?<clientip>[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}) - - \[(?<requesttime>[^ ]+ \+[0-9]+)\] "(?<requesttype>[A-Z]+) (?<requesturl>[^ ]+) HTTP/\d\.\d" (?<status>[0-9]+) (?<bodysize>[0-9]+) "(?<url>[^"]+)" "(?<ua>[^"]+)"'
        }
      }
    }
    output {
      elasticsearch {
        hosts => ["http://192.168.189.83:9200"]
      }
    }

Restart Logstash. Find the running process, kill it (1662 is the PID on this machine; use the one that ps reports for you), then start Logstash again:

    ps -ef | grep logstash
    kill 1662
    /usr/local/logstash-6.6.0/bin/logstash -f /usr/local/logstash-6.6.0/config/logstash.conf

Open http://192.168.189.84 in IE and refresh the page several times to generate new log entries.

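+++++++++++

If the regex fails to parse a line, do not send the event to ES:

    input {
      file {
        path => "/usr/local/nginx/logs/access.log"
      }
    }
    filter {
      grok {
        match => {
          "message" => '(?<clientip>[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}) - - \[(?<requesttime>[^ ]+ \+[0-9]+)\] "(?<requesttype>[A-Z]+) (?<requesturl>[^ ]+) HTTP/\d\.\d" (?<status>[0-9]+) (?<bodysize>[0-9]+) "[^"]+" "(?<ua>[^"]+)"'
        }
      }
    }
    output {
      # Do not send events to Elasticsearch when the Logstash regex extraction fails
      if "_grokparsefailure" not in [tags] and "_dateparsefailure" not in [tags] {
        elasticsearch {
          hosts => ["http://192.168.189.83:9200"]
        }
      }
    }

Note the space between the requesttype and requesturl groups: without it the pattern never matches a request such as "GET /favicon.ico HTTP/1.1", so every event would be tagged as a parse failure. In this variant the Referer is matched but not captured into a field.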
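The effect of the conditional output can be imitated in a few lines of Python. This is only an illustrative sketch of the tagging logic, not how Logstash is implemented: grok adds the tag `_grokparsefailure` to events it cannot match, and the `if` in the output section skips events carrying that tag. The function names `to_event` and `should_index` and the second test line are made up for this example.

```python
import re

# Python version of the grok pattern (named groups use (?P<name>...)).
ACCESS_RE = re.compile(
    r'(?P<clientip>[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}) - - '
    r'\[(?P<requesttime>[^ ]+ \+[0-9]+)\] '
    r'"(?P<requesttype>[A-Z]+) (?P<requesturl>[^ ]+) HTTP/\d\.\d" '
    r'(?P<status>[0-9]+) (?P<bodysize>[0-9]+) '
    r'"[^"]+" "(?P<ua>[^"]+)"'
)


def to_event(line):
    """Build an event dict and tag it when the pattern does not match."""
    event = {'message': line, 'tags': []}
    match = ACCESS_RE.match(line)
    if match:
        event.update(match.groupdict())
    else:
        event['tags'].append('_grokparsefailure')
    return event


def should_index(event):
    """Mirror the output condition: skip events with a parse-failure tag."""
    tags = event['tags']
    return '_grokparsefailure' not in tags and '_dateparsefailure' not in tags


lines = [
    # Real sample line from the access log.
    '192.168.189.1 - - [19/Apr/2019:11:15:09 +0800] '
    '"GET /favicon.ico HTTP/1.1" 404 548 "http://192.168.189.84/" '
    '"Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/72.0.3626.121 Safari/537.36"',
    # Made-up line that does not follow the access-log format.
    'this line is not in access-log format',
]

events = [to_event(line) for line in lines]
indexed = [event for event in events if should_index(event)]

print(len(events), 'events,', len(indexed), 'would be sent to Elasticsearch')
```

Dropping unparsed events keeps the index clean, at the cost of silently losing lines the pattern does not cover, so it is worth checking the pattern against real traffic first.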