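I. Lab environment: five CentOS 7.4 minimal installations, each with at least 1 GB of RAM.

Software: neo4j-enterprise-3.4.1-unix.tar.gz, haproxy-1.7.9.tar.gz, keepalived 1.4.4

II. IP plan:

III. Basic configuration

3.1 Download Neo4j 3.4.1 Enterprise Edition and upload it to /opt on neo4j01-03: https://pan.baidu.com/s/1mAIzZa_s5JxYb2iSOUxTzw (extraction code: i33m)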

3.2 Install the dependency packages (on all five servers):

    yum install gcc gcc-c++ openssl-devel popt-devel vim wget lrzsz -y

Install java-1.8.0-openjdk on every Neo4j node:

    yum install java-1.8.0-openjdk -y

3.3 Disable the firewall and SELinux:

    systemctl disable firewalld
    systemctl stop firewalld
    setenforce 0
    sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

IV. Install Neo4j (on neo4j01-03)

    cd /opt
    tar zxvf neo4j-enterprise-3.4.1-unix.tar.gz -C /usr/local/
    cd /usr/local/neo4j-enterprise-3.4.1/conf
    vim neo4j.conf
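The neo4j.conf changes here appear only in screenshots. Section V says the point is to let the server "accept logins from any host", so the key change is most likely uncommenting the default listen address. Below is a minimal sketch. It assumes the stock 3.4 neo4j.conf contains the commented line `#dbms.connectors.default_listen_address=0.0.0.0`. The function name `enable_remote_access` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make Neo4j listen on all interfaces by enabling
# dbms.connectors.default_listen_address (assumed to ship commented out
# in the stock Neo4j 3.4 neo4j.conf).
enable_remote_access() {
    local conf="${1:-/usr/local/neo4j-enterprise-3.4.1/conf/neo4j.conf}"
    if grep -q '^dbms\.connectors\.default_listen_address=' "$conf"; then
        return 0    # already enabled, nothing to do
    fi
    if grep -q '^#dbms\.connectors\.default_listen_address=' "$conf"; then
        # Drop the leading '#' and force the value to 0.0.0.0
        sed -i 's/^#dbms\.connectors\.default_listen_address=.*/dbms.connectors.default_listen_address=0.0.0.0/' "$conf"
    else
        # The setting is missing entirely: append it
        echo 'dbms.connectors.default_listen_address=0.0.0.0' >> "$conf"
    fi
}
```

Run `enable_remote_access` on each node, then restart Neo4j. The function is idempotent, so running it twice leaves a single active line.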

Start Neo4j:

    cd /usr/local/neo4j-enterprise-3.4.1/bin
    ./neo4j start

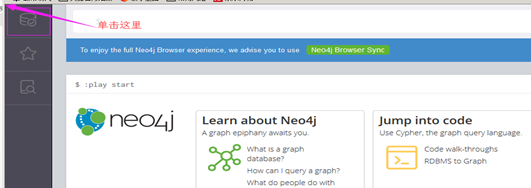

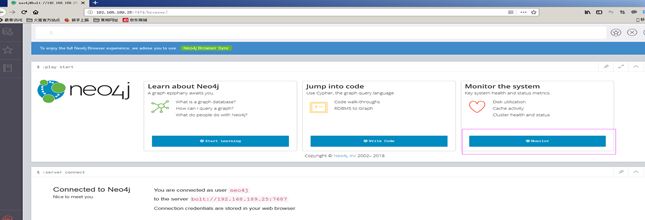

Change the default Neo4j password. The default username and password are both neo4j. Log in through the web UI in IE:

(neo4j02)

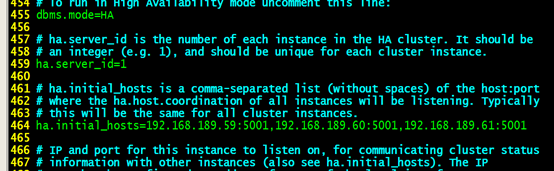

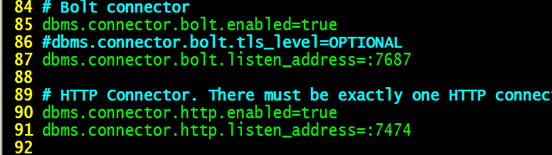
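The password can also be changed from the shell. This is a sketch, assuming the Neo4j 3.x REST endpoint `POST /user/<name>/password` is reachable on port 7474. The function `change_neo4j_password` and the sample password are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: change the password of the built-in "neo4j" user via
# the Neo4j 3.x REST endpoint POST /user/neo4j/password (HTTP port 7474).
# Arguments: host, current password, new password.
change_neo4j_password() {
    local host="$1" old_pw="$2" new_pw="$3"
    # The JSON body is built by plain substitution, so reject characters
    # that would need JSON escaping.
    case "$new_pw" in
        *\"*|*\\*)
            echo "password must not contain double quotes or backslashes" >&2
            return 2
            ;;
    esac
    curl -s -u "neo4j:${old_pw}" \
         -H 'Content-Type: application/json' \
         -X POST \
         -d "{\"password\":\"${new_pw}\"}" \
         "http://${host}:7474/user/neo4j/password"
}

# Usage (the initial credentials are neo4j/neo4j):
#   change_neo4j_password 192.168.189.60 neo4j 'MyNewPassw0rd'
```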

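Edit the configuration file on the other two nodes, neo4j01 and neo4j03, in the same way, so that they also accept logins from any host. Change their passwords the same way as in the web UI above. Remember to restart Neo4j after changing the configuration so that the change takes effect.

Stop Neo4j (from the bin directory):

    ./neo4j stop

Check the database status (from the bin directory):

    ./neo4j status

Client access: http://SERVER_IP:7474/browser/

V. Configure Neo4j high availability

First make sure Neo4j starts normally on neo4j01-03. Then edit the configuration file on neo4j01:

    cd /usr/local/neo4j-enterprise-3.4.1/conf
    vim neo4j.conf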

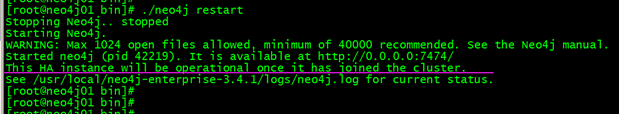

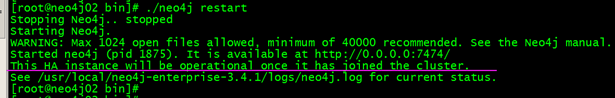

On the other two nodes, neo4j02 and neo4j03, only the ID differs: ha.server_id=2 on neo4j02 and ha.server_id=3 on neo4j03. All other settings are identical. Save and exit, then restart the service:

    cd /usr/local/neo4j-enterprise-3.4.1/bin
    ./neo4j restart

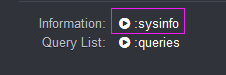

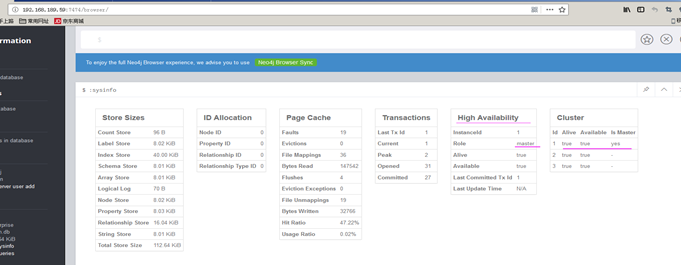

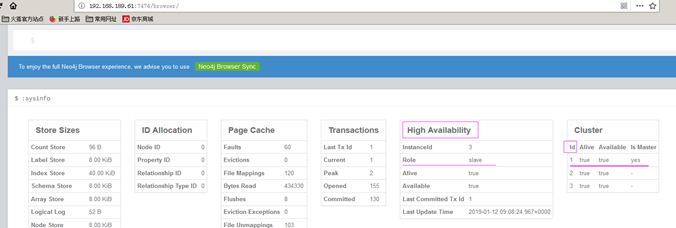

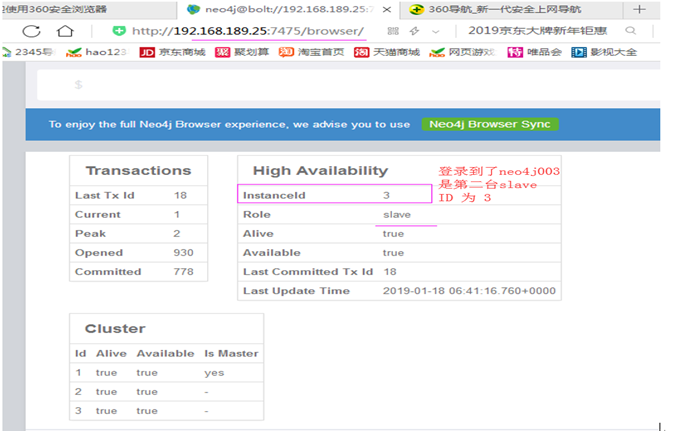
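The HA settings themselves appear only in screenshots. This sketch shows what a per-node block commonly looks like in Neo4j 3.4 HA mode. It assumes the default cluster coordination port 5001 and the three node IPs used in the HAProxy configuration later on. `ha_conf_block` is a hypothetical name. `dbms.security.ha_status_auth_enabled=false` is included on the further assumption that HAProxy's health checks, which send no credentials, must be able to reach `/db/manage/server/ha/*`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Neo4j 3.4 HA settings for one node.
# Only ha.server_id changes between nodes, as described above.
ha_conf_block() {
    local server_id="$1"
    # All three members, on the (assumed default) coordination port 5001
    local hosts="192.168.189.59:5001,192.168.189.60:5001,192.168.189.61:5001"
    case "$server_id" in
        ''|*[!0-9]*) echo "server id must be a non-negative integer" >&2; return 1 ;;
    esac
    cat <<EOF
dbms.mode=HA
ha.server_id=${server_id}
ha.initial_hosts=${hosts}
# Let HAProxy query /db/manage/server/ha/* without credentials (assumption)
dbms.security.ha_status_auth_enabled=false
EOF
}

# Usage on neo4j02 (remove any existing copies of these keys first):
#   ha_conf_block 2 >> /usr/local/neo4j-enterprise-3.4.1/conf/neo4j.conf
```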

VI. Verify: log in to the three Neo4j nodes in IE
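Each node's role can also be read from the shell, using the same HA status endpoints that the HAProxy health checks probe. Neo4j answers `/db/manage/server/ha/master` with HTTP 200 on the master and 404 elsewhere, and `/slave` works the same way. This is a sketch, assuming those endpoints are reachable without credentials. `ha_role` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report the HA role of one Neo4j 3.4 node by probing
# /db/manage/server/ha/{master,slave} (HTTP 200 = true, 404 = false).
ha_role() {
    local ip="$1" role code
    for role in master slave; do
        code=$(curl -s -o /dev/null -w '%{http_code}' \
               "http://${ip}:7474/db/manage/server/ha/${role}")
        if [ "$code" = "200" ]; then
            echo "$role"
            return 0
        fi
    done
    echo "unknown"
    return 1
}

# Usage:
#   for ip in 192.168.189.59 192.168.189.60 192.168.189.61; do echo "$ip $(ha_role "$ip")"; done
```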

Check the ports:

Check the processes:

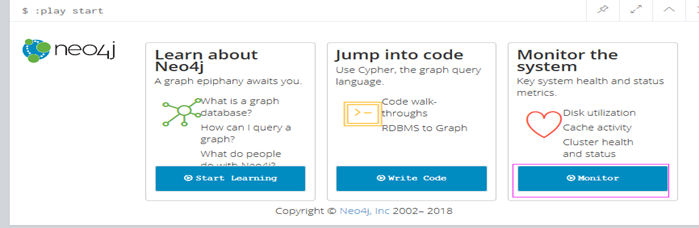
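The port and process checks appear only in screenshots. This sketch does the port check from the shell. It assumes the default ports 7474 (HTTP), 7687 (Bolt) and 5001 (HA cluster coordination). `missing_ports` is hypothetical. It reads `ss -lnt` output on stdin, so it can also be tried against saved output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ss -lnt` output on stdin and print each expected
# port that is NOT listening. Default ports (assumed for this setup):
# 7474 HTTP, 7687 Bolt, 5001 HA cluster coordination.
missing_ports() {
    local ports=("$@") listening port missing=0
    if [ "${#ports[@]}" -eq 0 ]; then
        ports=(7474 7687 5001)
    fi
    # Column 4 is "Local Address:Port"; keep the part after the last colon.
    # NR > 1 skips the header line.
    listening=$(awk 'NR > 1 { n = split($4, a, ":"); print a[n] }')
    for port in "${ports[@]}"; do
        if ! grep -qx "$port" <<< "$listening"; then
            echo "$port"
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `ss -lnt | missing_ports` prints nothing and returns 0 when all three ports are listening. The processes can be listed with `ps -ef | grep '[n]eo4j'`, where the brackets stop grep from matching its own command line.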

VII. Test data synchronization. Use Neo4j's built-in example dataset, working in the web UI (IE) of neo4j01.

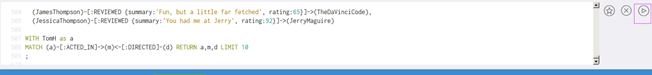

In the Browser's query editor, run the following query:

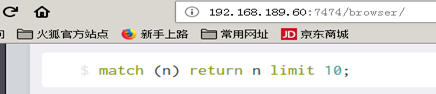

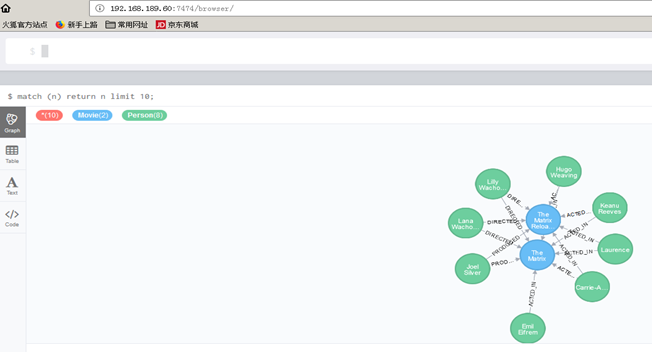

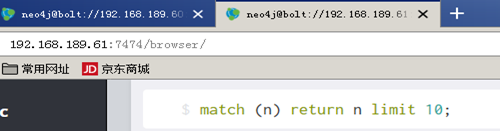

++++++++++++++++++++++++++++++++++++++++++++++

Next, in the web UI of neo4j02 and neo4j03, run the following in the query editor:

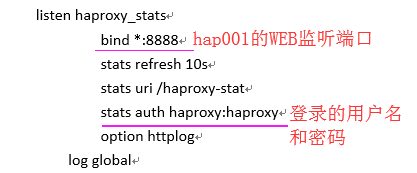

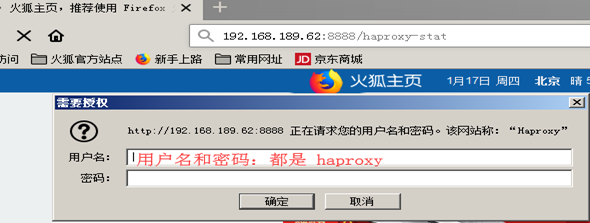

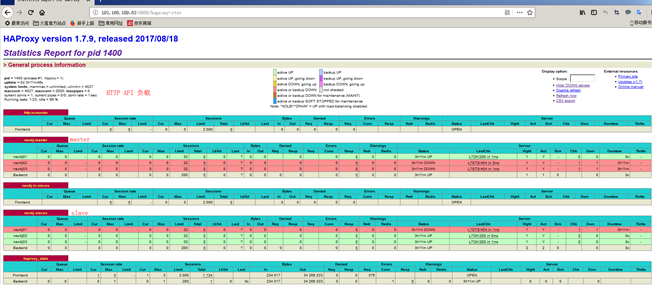

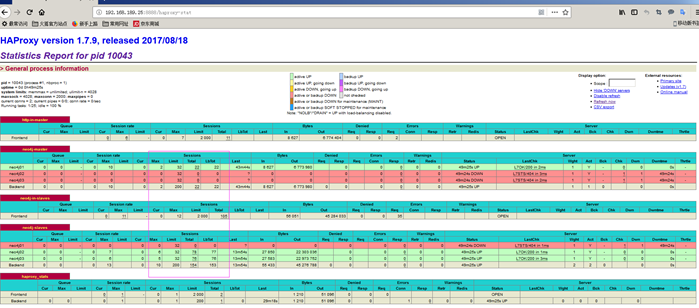
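The queries themselves appear only in screenshots. As a sketch, the same consistency check can be scripted by running one count query on every node with cypher-shell, which ships in Neo4j's bin/ directory. The node count is an assumed stand-in for the author's queries, and `same_count_everywhere` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run the same count query on each node via cypher-shell
# and fail if any node returns a different value, i.e. it has not yet
# received the master's writes.
# Arguments: password, then one or more node IPs.
same_count_everywhere() {
    local password="$1"; shift
    local ip count first=""
    for ip in "$@"; do
        # With --format plain, cypher-shell prints a header line, then the value
        count=$(echo 'MATCH (n) RETURN count(n);' |
                cypher-shell -a "bolt://${ip}:7687" -u neo4j -p "$password" --format plain |
                tail -n 1)
        echo "${ip} ${count}"
        [ -n "$count" ] || return 1          # query failed or returned nothing
        if [ -z "$first" ]; then
            first="$count"
        elif [ "$count" != "$first" ]; then
            return 1                          # this node differs from the first one
        fi
    done
}

# Usage:
#   export PATH=$PATH:/usr/local/neo4j-enterprise-3.4.1/bin
#   same_count_everywhere 'your-password' 192.168.189.59 192.168.189.60 192.168.189.61
```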

The Neo4j 3.4.1 HA cluster is now configured.

VIII. Install HAProxy as a proxy in front of Neo4j

8.1 Installation (on both hap001 and hap002)

Install the dependency packages and download the source:

    yum install gcc gcc-c++ openssl-devel popt-devel -y
    cd /opt
    wget http://pkgs.fedoraproject.org/repo/pkgs/haproxy/haproxy-1.7.9.tar.gz/sha512/d1ed791bc9607dbeabcfc6a1853cf258e28b3a079923b63d3bf97504dd59e64a5f5f44f9da968c23c12b4279e8d45ff3bd39418942ca6f00d9d548c9a0ccfd73/haproxy-1.7.9.tar.gz

Extract, compile and install. uname -r shows the kernel version. CentOS 7 runs a 3.10 kernel, and the matching HAProxy 1.7 build target is linux2628 (HAProxy 1.7 has no linux310 target):

    tar zxvf haproxy-1.7.9.tar.gz && cd haproxy-1.7.9/
    uname -r
    make TARGET=linux2628 ARCH=x86_64 PREFIX=/usr/local/haproxy
    make install PREFIX=/usr/local/haproxy
    cd /usr/local/haproxy/
    mkdir conf && cd conf/
    vim haproxy.cfg

Contents of haproxy.cfg:

    ## HAProxy configuration for the HTTP API
    global
        log 127.0.0.1 local0 notice
        daemon
        nbproc 1
        pidfile /usr/local/haproxy/haproxy.pid
        maxconn 2000

    defaults
        mode http
        timeout connect 5s
        timeout server 5s
        timeout client 5s

    frontend http-in-master
        bind *:7474
        default_backend neo4j-master

    backend neo4j-master
        option httpchk GET /db/manage/server/ha/master
        server neo4j01 192.168.189.59:7474 maxconn 32 check
        server neo4j02 192.168.189.60:7474 maxconn 32 check
        server neo4j03 192.168.189.61:7474 maxconn 32 check

    frontend neo4j-in-slaves
        bind *:7475
        default_backend neo4j-slaves

    backend neo4j-slaves
        balance roundrobin
        option httpchk GET /db/manage/server/ha/slave
        server neo4j01 192.168.189.59:7474 maxconn 32 check
        server neo4j02 192.168.189.60:7474 maxconn 32 check
        server neo4j03 192.168.189.61:7474 maxconn 32 check

    listen haproxy_stats
        mode http
        bind *:8888
        stats refresh 10s
        stats uri /haproxy-stat
        stats auth haproxy:haproxy
        option httplog
        log global

Save and exit.

++++++++++++++++++++++++++++++++++++++

Create the Bolt configuration:

    vim haproxy-bolt.cfg

Contents of haproxy-bolt.cfg:

    ## HAProxy configuration for the Bolt protocol
    global
        log 127.0.0.1 local0 notice
        daemon
        nbproc 1

    defaults
        mode tcp
        timeout connect 30s
        timeout server 2h
        timeout client 2h

    frontend neo4j-bolt-write
        bind *:7687
        default_backend bolt-master

    backend bolt-master
        option httpchk HEAD /db/manage/server/ha/master HTTP/1.0
        server neo4j01 192.168.189.59:7687 check port 7474
        server neo4j02 192.168.189.60:7687 check port 7474
        server neo4j03 192.168.189.61:7687 check port 7474

    frontend neo4j-bolt-read
        bind *:7688
        default_backend bolt-slaves

    backend bolt-slaves
        balance roundrobin
        option httpchk HEAD /db/manage/server/ha/slave HTTP/1.0
        server neo4j01 192.168.189.59:7687 check port 7474
        server neo4j02 192.168.189.60:7687 check port 7474
        server neo4j03 192.168.189.61:7687 check port 7474

    listen haproxy_bolt-stats
        mode http
        bind *:8888
        stats refresh 10s
        stats uri /haproxy-stat
        stats auth haproxy:haproxy
        option httplog
        log global

Save and exit.

+++++++++++++++++++++++++++++++

Create a symlink for the haproxy command:

    ln -s /usr/local/haproxy/sbin/haproxy /usr/local/sbin/haproxy

Start HAProxy:

    haproxy -f /usr/local/haproxy/conf/haproxy.cfg -f /usr/local/haproxy/conf/haproxy-bolt.cfg
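Before starting, and again after any later edit, HAProxy can parse both files in its check mode (-c) without starting. This is a minimal wrapper, assuming the paths used above. `start_haproxy_checked` and `HAPROXY_CONF_DIR` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: validate both HAProxy config files with `haproxy -c`
# and only start the proxy if the check passes.
HAPROXY_CONF_DIR=${HAPROXY_CONF_DIR:-/usr/local/haproxy/conf}

start_haproxy_checked() {
    local args=(-f "${HAPROXY_CONF_DIR}/haproxy.cfg"
                -f "${HAPROXY_CONF_DIR}/haproxy-bolt.cfg")
    if ! haproxy -c "${args[@]}"; then
        echo "configuration check failed, not starting haproxy" >&2
        return 1
    fi
    haproxy "${args[@]}"
}
```

Passing the same -f list to both calls keeps the check and the real start in sync. A syntax error in either file therefore leaves the running instance, if any, untouched.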

8.2 Configure HAProxy logging (hap002 needs this as well)

    yum -y install rsyslog

Edit /etc/sysconfig/rsyslog and set:

    SYSLOGD_OPTIONS="-r -m 0 -c 2"
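HAProxy sends its logs over UDP to 127.0.0.1 (log 127.0.0.1 local0). With CentOS 7's rsyslog, the network input is normally enabled in /etc/rsyslog.conf through the imudp module, so the -r option above may not be enough on its own. This is a sketch, assuming the legacy directive syntax that CentOS 7's rsyslog understands and a log file at /var/log/haproxy.log. The file name and the function `enable_haproxy_syslog` are hypothetical. Run it before restarting rsyslog.

```shell
#!/usr/bin/env bash
# Hypothetical helper: enable rsyslog's UDP listener and route facility local0
# (used by "log 127.0.0.1 local0" in haproxy.cfg) to its own file.
# Idempotent: each line is appended only if it is not already present.
enable_haproxy_syslog() {
    local conf="${1:-/etc/rsyslog.conf}" line
    local lines=(
        '$ModLoad imudp'
        '$UDPServerRun 514'
        'local0.*    /var/log/haproxy.log'
    )
    for line in "${lines[@]}"; do
        grep -qxF "$line" "$conf" || echo "$line" >> "$conf"
    done
}
```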

Then restart rsyslog:

    systemctl restart rsyslog.service

8.3 Copy the HAProxy configuration files to /usr/local/haproxy/conf on hap002:

    scp -r /usr/local/haproxy/conf/* root@192.168.189.63:/usr/local/haproxy/conf/

Create the symlink for the haproxy command (on hap002):

    ln -s /usr/local/haproxy/sbin/haproxy /usr/local/sbin/haproxy

Start HAProxy:

    haproxy -f /usr/local/haproxy/conf/haproxy.cfg -f /usr/local/haproxy/conf/haproxy-bolt.cfg

8.4 Manage HAProxy with systemctl (on hap001 first)

    vim /usr/lib/systemd/system/haproxy.service

Contents of haproxy.service. Note that systemd does not pass Exec lines through a shell, so backticks are not expanded. It also has no ExecRestart directive: systemctl restart simply runs ExecStop and then ExecStart. The stop command therefore uses the PID that systemd reads from PIDFile ($MAINPID):

    [Unit]
    Description=HAProxy
    After=network.target

    [Service]
    User=root
    Type=forking
    PIDFile=/usr/local/haproxy/haproxy.pid
    ExecStart=/usr/local/haproxy/sbin/haproxy -f /usr/local/haproxy/conf/haproxy.cfg -f /usr/local/haproxy/conf/haproxy-bolt.cfg
    ExecStop=/usr/bin/kill $MAINPID

    [Install]
    WantedBy=multi-user.target

Save and exit, then copy this file (haproxy.service) to the same location on hap002.

On both hap001 and hap002, first stop the instance that was started by hand above, so that systemd manages a single HAProxy process. Then reload systemd, restart the service and enable it at boot:

    kill $(cat /usr/local/haproxy/haproxy.pid)
    systemctl daemon-reload
    systemctl restart haproxy
    systemctl enable haproxy

IX. Install keepalived (on both hap001 and hap002)

    cd /opt
    wget http://www.keepalived.org/software/keepalived-1.4.4.tar.gz
    tar zxvf keepalived-1.4.4.tar.gz
    cd keepalived-1.4.4/
    ./configure --prefix=/usr/local/keepalived/
    make && make install
    mkdir -p /etc/keepalived
    cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/
    cp /usr/local/keepalived/sbin/keepalived /usr/sbin/
    cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

Edit the configuration file:

    vim /etc/keepalived/keepalived.conf

Add the following content:

    ! Configuration File for keepalived
    global_defs {
        router_id haproxy-router
        notification_email {
            331758730@qq.com
        }
        notification_email_from 331758730@qq.com
        smtp_server smtp.qq.com
        smtp_connect_timeout 30
    }

    vrrp_script chk_haproxy {
        script "/usr/local/haproxy/check_ha.sh"
        interval 2
        fall 2
        rise 2
    }

    vrrp_instance haproxy_1 {
        state MASTER
        interface ens33
        virtual_router_id 238
        priority 100
        advert_int 1
        #nopreempt
        authentication {
            auth_type PASS
            auth_pass haproxy
        }
        track_script {
            chk_haproxy
        }
        virtual_ipaddress {
            192.168.189.25/24
        }
        notify /usr/local/haproxy/notify_ha.sh
    }

Save and exit. Copy this configuration file to hap002 and change two settings there: state MASTER becomes state BACKUP, and priority 100 becomes priority 98. Leave everything else unchanged.

Health-check script:

    vim /usr/local/haproxy/check_ha.sh

Contents:

    #!/bin/bash
    LOGFILE="/var/log/keepalived-haproxy-state.log"
    date >> "$LOGFILE"
    # Exit non-zero when no haproxy process is running, so keepalived gives up the VIP
    if [ "$(ps -C haproxy --no-header | wc -l)" -eq 0 ]; then
        echo "fail: check_haproxy status" >> "$LOGFILE"
        exit 1
    else
        echo "success: check_haproxy status" >> "$LOGFILE"
        exit 0
    fi

+++++++++++++++++++++++++++++++++++++

Notification script notify_ha.sh:

    vim /usr/local/haproxy/notify_ha.sh

Contents. keepalived calls the notify script on every state change, not only when becoming master, and passes the type, the instance name and the new state as arguments:

    #!/bin/bash
    LOGFILE="/var/log/keepalived-haproxy-state.log"
    # $1 = INSTANCE or GROUP, $2 = instance name, $3 = new state (MASTER/BACKUP/FAULT)
    echo "$(date): $2 is now $3" >> "$LOGFILE"

+++++++++++++++++++++++++++++++

Make both scripts executable:

    chmod +x /usr/local/haproxy/check_ha.sh /usr/local/haproxy/notify_ha.sh

+++++++++++++++++++++

Both scripts must also be copied to the same location on hap002:

    scp /usr/local/haproxy/check_ha.sh /usr/local/haproxy/notify_ha.sh root@192.168.189.63:/usr/local/haproxy/

Enable and start keepalived (on both nodes):

    systemctl enable keepalived
    systemctl start keepalived

Check the VIP on hap001:
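This sketch checks the VIP from a script rather than by reading `ip addr` output by eye. It assumes interface ens33 and the VIP 192.168.189.25 from keepalived.conf. `has_vip` is hypothetical and reads the `ip -4 addr` output on stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the keepalived VIP appears in `ip -4 addr`
# output read from stdin. A VIP added by keepalived typically shows up as an
# extra "inet <vip>/<prefix> ... secondary <iface>" line.
has_vip() {
    local vip="$1"
    awk -v vip="$vip" '
        $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
        END { exit !found }
    '
}

# Usage on hap001:
#   ip -4 addr show dev ens33 | has_vip 192.168.189.25 && echo "VIP is on this host"
```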

Test whether the VIP fails over correctly, starting on hap001. First stop HAProxy on hap001:

    systemctl stop haproxy

On hap002, check whether the VIP has moved over.

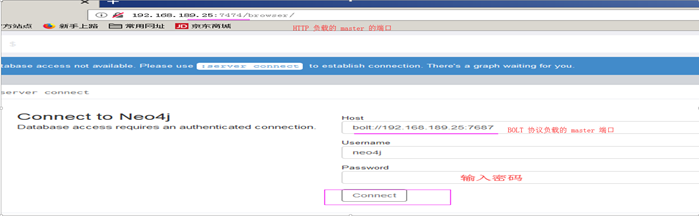

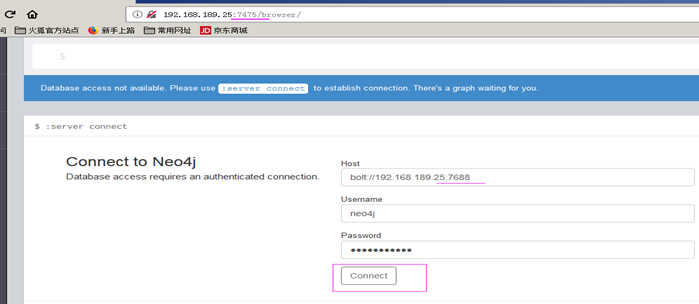

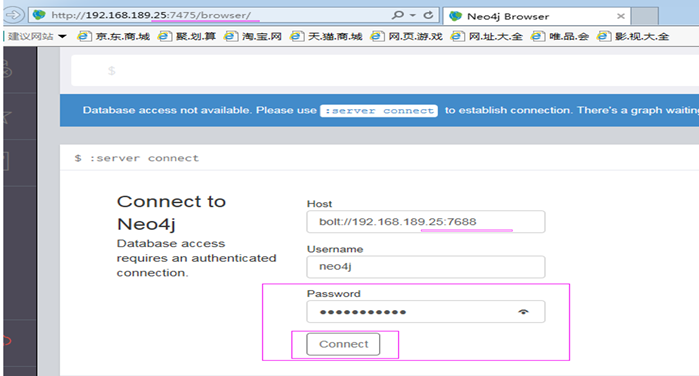

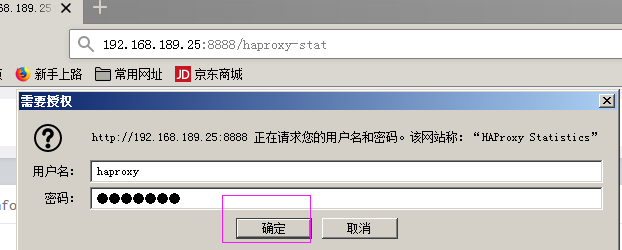
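To measure how long the failover takes, a polling loop can run on hap002 right after HAProxy has been stopped on hap001. This is a sketch, assuming interface ens33 and the VIP from keepalived.conf. `wait_for_vip` and its timeout argument are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper (run on hap002): poll once per second until the VIP is
# bound on the given interface or the timeout (in seconds) expires.
# Prints the number of seconds waited on success.
wait_for_vip() {
    local vip="$1" dev="${2:-ens33}" timeout="${3:-30}" waited=0
    while [ "$waited" -le "$timeout" ]; do
        # "inet <vip>/" anchors the match to the full address
        if ip -4 addr show dev "$dev" | grep -qF "inet ${vip}/"; then
            echo "$waited"
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 1
}

# Usage on hap002:
#   wait_for_vip 192.168.189.25 ens33 30 && echo "failover done"
```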

X. Testing

10.1 Log in through the VIP (192.168.189.25) in IE

Data query:

++++++ Log in through the slave ports (HTTP: 7475, Bolt: 7688)

+++++++++++ Log in through a slave port from another virtual machine:

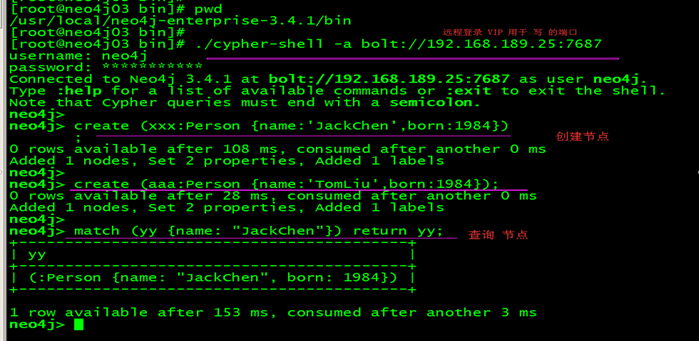
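The same test can be scripted against the VIP with Neo4j 3.x's transactional HTTP endpoint /db/data/transaction/commit. Port 7474 reaches HAProxy's master backend and 7475 its slave backend. This is a sketch: `neo4j_http_query`, the password and the sample statements are hypothetical. The statement must not contain double quotes or backslashes, because the JSON body is built by plain string substitution.

```shell
#!/usr/bin/env bash
# Hypothetical helper: send one Cypher statement through the HAProxy VIP using
# the Neo4j 3.x transactional endpoint. Port 7474 = master, 7475 = slaves.
VIP=${VIP:-192.168.189.25}

neo4j_http_query() {
    local port="$1" password="$2" statement="$3"
    case "$statement" in
        *\"*|*\\*) echo "quotes/backslashes not supported by this sketch" >&2; return 2 ;;
    esac
    curl -s -u "neo4j:${password}" \
         -H 'Content-Type: application/json' \
         -d "{\"statements\":[{\"statement\":\"${statement}\"}]}" \
         "http://${VIP}:${port}/db/data/transaction/commit"
}

# Usage:
#   neo4j_http_query 7474 'your-password' "CREATE (:HaTest {at: timestamp()})"
#   neo4j_http_query 7475 'your-password' "MATCH (n:HaTest) RETURN count(n)"
```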

10.2 Remote command-line login test: use any one of neo4j01-03, or any other host that has Neo4j installed.
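From the command line, cypher-shell (in Neo4j's bin/ directory) can connect through the VIP. Bolt port 7687 reaches the master via HAProxy, and 7688 reaches the slaves. This is a sketch, assuming bin/ is on PATH. `bolt_roundtrip` and the `:HaTest` label are hypothetical. It writes a marker node through the write port and reads it back through the read port. The read is retried a few times because, depending on ha.tx_push_factor and ha.pull_interval, a slave may lag briefly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a marker node via the Bolt write port (7687,
# HAProxy -> master) and read it back via the read port (7688, HAProxy ->
# slaves), retrying the read up to 5 times one second apart.
VIP=${VIP:-192.168.189.25}

bolt_roundtrip() {
    local password="$1" marker="ha-$$-$(date +%s)" tries=0 found
    echo "CREATE (:HaTest {marker: '${marker}'});" |
        cypher-shell -a "bolt://${VIP}:7687" -u neo4j -p "$password" --format plain >/dev/null || return 1
    while [ "$tries" -lt 5 ]; do
        found=$(echo "MATCH (n:HaTest {marker: '${marker}'}) RETURN count(n);" |
                cypher-shell -a "bolt://${VIP}:7688" -u neo4j -p "$password" --format plain |
                tail -n 1)
        [ "$found" = "1" ] && return 0
        sleep 1
        tries=$((tries + 1))
    done
    return 1
}

# Usage:
#   bolt_roundtrip 'your-password' && echo "write via 7687 visible via 7688"
```

The marker nodes can be removed afterwards with `MATCH (n:HaTest) DELETE n;` through port 7687.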

+++++++++++++++ END+++++++++++++++++++++