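Earlier we tried using a single Redis instance as the cache layer for log collection. A single Redis instance is a single point of failure. Persistence can be enabled, but data can still be lost if the server dies or nobody knows how long a repair will take. Redis has two high-availability options, Sentinel and Cluster. Unfortunately, neither Filebeat nor Logstash supports either of them. With what we have already learned we can still work around the problem. It is not a complete solution, but it is good enough. The plan:

1. Put nginx + keepalived in front of several Redis instances as a reverse proxy.
2. Redis failover can leave data inconsistent, so it is best to use only 2 Redis instances with only one active at a time. The other is a backup and only takes over after the first one fails.
3. Point Filebeat's Redis output at the keepalived virtual IP.
4. Start several Logstash nodes to read data from Redis faster.
5. Use a multi-node Elasticsearch cluster on the back end.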
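Point 4 works because a Redis list acts as a shared queue. Each pop removes an element, so several Logstash consumers split the events between them instead of each getting a copy. The Python sketch below models this with `collections.deque` standing in for the Redis list. It assumes producers append to the tail and consumers take from the head. The helper name `drain` is hypothetical and is not part of any Logstash or Redis API. Strict round-robin is a simplification, because real consumers race for each element.

```python
from collections import deque


def drain(queue, n_consumers):
    """Pop every event from a shared queue, giving each pop to the next consumer in turn.

    Hypothetical helper: the deque stands in for a Redis list and popleft()
    for a list pop, which removes the element so only one consumer receives it.
    """
    received = [[] for _ in range(n_consumers)]
    turn = 0
    while queue:
        received[turn % n_consumers].append(queue.popleft())
        turn += 1
    return received


if __name__ == "__main__":
    events = deque(f"event-{i}" for i in range(10))  # what the shipper appended
    per_consumer = drain(events, 2)
    for idx, batch in enumerate(per_consumer):
        print(f"consumer {idx}: {len(batch)} events")
```

Each event reaches exactly one consumer, so adding consumers increases read throughput without duplicating documents in Elasticsearch.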

The topology diagram below shows the lab environment used here:

Lab environment:

- 192.168.189.118 ----> nginx + Filebeat client, Logstash, Elasticsearch, Kibana
- 192.168.189.119 ----> nginx + keepalived + Redis
- 192.168.189.120 ----> nginx + keepalived + Redis

Hardware resources are limited here. In a real environment, Logstash and Kibana would not run on the client, and the Redis instances would also be on separate machines.

Preparation:

1. Install nginx and keepalived

Run these steps on both 189.119 and 189.120. The steps are identical, and only the keepalived configuration differs slightly.

```
yum install nginx keepalived -y
cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak
```

keepalived configuration on 192.168.189.119 (`vim /etc/keepalived/keepalived.conf`):

```
global_defs {
    router_id lb01
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 50
    priority 150
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.189.133
    }
}
```

++++++++++++

keepalived configuration on 192.168.189.120 (`vim /etc/keepalived/keepalived.conf`):

```
global_defs {
    router_id lb02
}

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 50
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.189.133
    }
}
```

If your network interface is not named `eth0`, change `interface` to match it on both nodes.

Start keepalived on both 189.119 and 189.120:

```
systemctl start keepalived
```

On 189.119, check whether the VIP 192.168.189.133 is present (for example with `ip addr show eth0`):

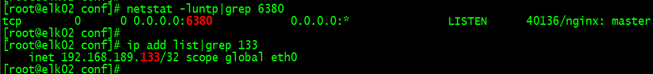
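The two configurations differ only in `router_id`, `state`, and `priority`. The VIP follows the live node with the higher priority. The Python sketch below is a simplified model of that election and uses a hypothetical helper `vip_owner`. It does not model VRRP tie-breaking by IP address. It also models only whether keepalived itself is alive. The configuration above has no `vrrp_script` health check, so if only nginx stops on 189.119, the VIP does not move.

```python
def vip_owner(nodes):
    """Return the router_id of the node that should hold the VIP.

    Simplified VRRP model: among nodes whose keepalived is alive, the one with
    the highest priority becomes MASTER. Ties (broken by IP address in real
    VRRP) are not modeled.
    """
    alive = [n for n in nodes if n["alive"]]
    if not alive:
        return None
    return max(alive, key=lambda n: n["priority"])["router_id"]


if __name__ == "__main__":
    lb01 = {"router_id": "lb01", "priority": 150, "alive": True}
    lb02 = {"router_id": "lb02", "priority": 100, "alive": True}
    print(vip_owner([lb01, lb02]))  # lb01 holds 192.168.189.133
    lb01["alive"] = False
    print(vip_owner([lb01, lb02]))  # lb02 takes over
    lb01["alive"] = True
    print(vip_owner([lb01, lb02]))  # lb01 takes the VIP back (preemption is on by default)
```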

```
systemctl enable keepalived
```

++++++++++++++++++++++++++++

2. Install Redis

Install Redis on both 189.119 and 189.120. The steps are identical.

Directory layout:

- Redis download directory: /data/soft
- Install directory: /usr/local/redis_cluster/redis_{port}/{conf,logs,pid}
- Data directory: /data/redis_cluster/redis_{port}/redis_{port}.rdb (the persistence file)
- Ops script: /root/scripts/redis_shell.sh

```
mkdir -p /data/soft
mkdir -p /usr/local/redis_cluster/redis_6379/{conf,logs,pid}
mkdir -p /data/redis_cluster/redis_6379
cd /data/soft/
wget http://download.redis.io/releases/redis-5.0.8.tar.gz
tar zxf redis-5.0.8.tar.gz -C /usr/local/redis_cluster/
cd /usr/local/redis_cluster/
ln -s redis-5.0.8 redis
cd redis
make
make install
```

Redis configuration file: the `install_server.sh` script that ships with Redis can generate a configuration file. That file is the official one, with every option included:

```
cd /usr/local/redis_cluster/redis/utils
./install_server.sh
```

A minimal Redis configuration file:

```
cd /usr/local/redis_cluster/redis_6379/conf
vim redis_6379.conf
```

```
# Run as a daemon
daemonize yes
# Address to bind to
bind 0.0.0.0
# Listening port
port 6379
# Locations of the pid file and the log file
pidfile /usr/local/redis_cluster/redis_6379/pid/redis_6379.pid
logfile /usr/local/redis_cluster/redis_6379/logs/redis_6379.log
# Number of databases; the default database is 0
databases 16
# File name of the local persistence file (default: dump.rdb)
dbfilename redis_6379.rdb
# Directory for the local database
dir /data/redis_cluster/redis_6379
```

Start Redis on 189.119 and 189.120:

```
redis-server /usr/local/redis_cluster/redis_6379/conf/redis_6379.conf
```

++++++++++++++++++++

3. Configure nginx on 189.119 and 189.120 (the configuration is the same on both)

Edit `/etc/nginx/nginx.conf` and add the following **outside** the `http` block:

```
stream {
    upstream redis {
        server 192.168.189.119:6379 max_fails=2 fail_timeout=10s;
        server 192.168.189.120:6379 max_fails=2 fail_timeout=10s backup;
    }
    server {
        listen 6380;
        proxy_connect_timeout 1s;
        proxy_timeout 3s;
        proxy_pass redis;
    }
}
```

Save and exit, check the syntax with `nginx -t`, then start nginx:

```
systemctl start nginx
```
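The `upstream` block sends every new connection to 189.119 and uses 189.120 only while 189.119 is marked unavailable. According to the nginx documentation, `max_fails=2 fail_timeout=10s` means that two failed attempts within 10 seconds mark a server as unavailable for 10 seconds. The Python sketch below is a simplified model of that decision. `Peer` and `pick` are hypothetical names, and the model ignores details of nginx's real implementation, such as how it retries a server once the timeout expires.

```python
from dataclasses import dataclass, field


@dataclass
class Peer:
    """One upstream server with nginx-style max_fails / fail_timeout bookkeeping."""
    addr: str
    max_fails: int = 2
    fail_timeout: float = 10.0
    fails: list = field(default_factory=list)  # timestamps of recent failures
    down_until: float = 0.0

    def record_failure(self, now):
        # Only failures inside the fail_timeout window count toward max_fails
        self.fails = [t for t in self.fails if now - t < self.fail_timeout]
        self.fails.append(now)
        if len(self.fails) >= self.max_fails:
            # Mark the peer unavailable for fail_timeout seconds
            self.down_until = now + self.fail_timeout
            self.fails.clear()

    def available(self, now):
        return now >= self.down_until


def pick(primary, backup, now):
    """Return the peer a new connection goes to: the primary unless it is marked down."""
    return primary if primary.available(now) else backup


if __name__ == "__main__":
    primary = Peer("192.168.189.119:6379")
    backup = Peer("192.168.189.120:6379")
    print(pick(primary, backup, now=0.0).addr)   # 192.168.189.119:6379
    primary.record_failure(now=1.0)
    primary.record_failure(now=2.0)              # second failure inside 10 s
    print(pick(primary, backup, now=3.0).addr)   # 192.168.189.120:6379 (backup)
    print(pick(primary, backup, now=12.0).addr)  # 192.168.189.119:6379 again
```

Because the backup is only chosen while the primary is down, only one Redis instance receives writes at any given time. That is the point of step 2 of the plan.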

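```
systemctl enable nginx
```

To keep the two services apart, nginx listens on port 6380 instead of 6379. Connections to port 6380 on the VIP 192.168.189.133 are forwarded automatically to the back-end Redis servers.

`server 192.168.189.120:6379 max_fails=2 fail_timeout=10s backup;` marks 189.120 as a backup server. nginx only sends connections to it when the primary server (189.119) is unavailable. With `max_fails=2 fail_timeout=10s`, if two connection attempts to a server fail within 10 seconds, nginx treats that server as unavailable for the next 10 seconds.

++++++++++++++++++++++++++

4. Modify the Filebeat configuration (on 192.168.189.118)

```
vim /etc/filebeat/filebeat.yml
```

```
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]

- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]

######### Write to Redis
output.redis:
  hosts: ["192.168.189.133:6380"]
  keys:
    - key: "nginx_redis_acc"
      when.contains:
        tags: "access"
    - key: "nginx_redis_err"
      when.contains:
        tags: "error"
```

Save and exit, restart Filebeat, and watch its log:

```
systemctl restart filebeat
tail -f /var/log/filebeat/filebeat
```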

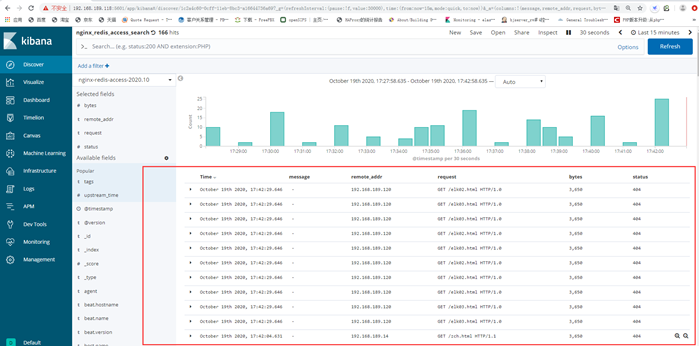

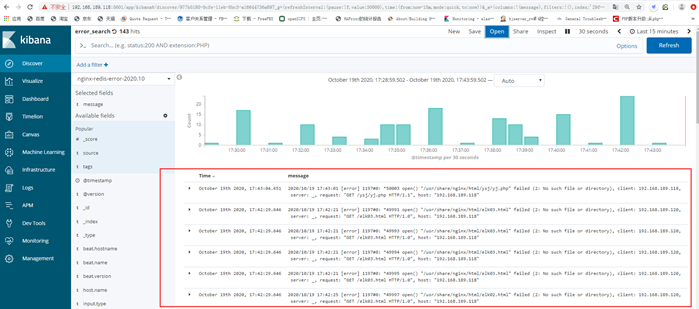
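The `keys` list in `output.redis` chooses the Redis list for each event from its tags. As I understand the Filebeat documentation, the rules are tried in order and the first match wins. A `contains` condition on an array field matches if any element contains the given string. An event that matches no rule falls back to the output's `key` setting, which is assumed here to be the default `filebeat`. The Python sketch below models that routing. `KEY_RULES` and `redis_key` are hypothetical names.

```python
# Routing rules copied from output.redis.keys above: (Redis key, string required in tags)
KEY_RULES = [
    ("nginx_redis_acc", "access"),
    ("nginx_redis_err", "error"),
]


def redis_key(event, fallback="filebeat"):
    """Return the Redis list an event would be pushed to.

    Rules are tried in order and the first match wins. Like `contains` on an
    array field, a rule matches if any tag contains the string. Events that
    match no rule go to the fallback key (assumed default output key).
    """
    tags = event.get("tags", [])
    for key, needle in KEY_RULES:
        if any(needle in tag for tag in tags):
            return key
    return fallback


if __name__ == "__main__":
    print(redis_key({"tags": ["access"]}))  # nginx_redis_acc
    print(redis_key({"tags": ["error"]}))   # nginx_redis_err
    print(redis_key({"message": "no tags"}))  # filebeat
```

The Logstash `redis` inputs in the next step read from exactly these two keys. Events that end up under any other key would never be read.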

5. Modify the Logstash configuration (on 192.168.189.118)

Write the pipeline configuration to `/etc/logstash/conf.d/redis.conf`, the file loaded by `logstash -f` below. `/etc/logstash/logstash.yml` holds Logstash's own settings, not pipeline definitions.

```
vim /etc/logstash/conf.d/redis.conf
```

```
input {
  redis {
    host => "192.168.189.133"
    port => 6380
    db => 0
    key => "nginx_redis_acc"
    data_type => "list"
  }
  redis {
    host => "192.168.189.133"
    port => 6380
    db => 0
    key => "nginx_redis_err"
    data_type => "list"
  }
}

filter {
  mutate {
    convert => {
      "upstream_time" => "float"
      "request_time" => "float"
    }
  }
}

output {
  #stdout {}
  if "access" in [tags] {
    elasticsearch {
      hosts => "http://192.168.189.118:9200"
      manage_template => false
      index => "nginx-redis-access-%{+yyyy.MM}"
    }
  }
  if "error" in [tags] {
    elasticsearch {
      hosts => "http://192.168.189.118:9200"
      manage_template => false
      index => "nginx-redis-error-%{+yyyy.MM}"
    }
  }
}
```

Save and exit, then start Logstash. If Logstash is already running, stop it first.

```
logstash -f /etc/logstash/conf.d/redis.conf
```

6. Test

Generate some nginx access-log entries on 192.168.189.118 by running the following on 192.168.189.120:

```
ab -n 5 -c 5 http://192.168.189.118/elk02.html
ab -n 5 -c 5 http://192.168.189.118/elk03.html
```

Log in to Kibana to view the data: