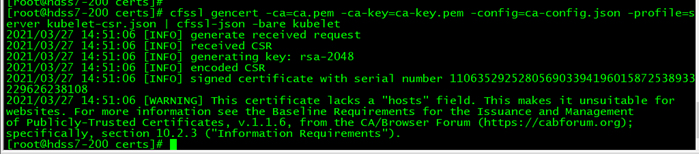

As before, kubelet is deployed on 10.4.7.21 and 10.4.7.22. Start by issuing the certificate, working on 10.4.7.200.

Create the JSON configuration file for the certificate signing request (CSR):

    cd /opt/certs
    vi kubelet-csr.json

    {
        "CN": "k8s-kubelet",
        "hosts": [
            "127.0.0.1",
            "10.4.7.10",
            "10.4.7.21",
            "10.4.7.22",
            "10.4.7.23",
            "10.4.7.24",
            "10.4.7.25",
            "10.4.7.26",
            "10.4.7.27",
            "10.4.7.28"
        ],
        "key": {
            "algo": "rsa",
            "size": 2048
        },
        "names": [
            {
                "C": "CN",
                "ST": "beijing",
                "L": "beijing",
                "O": "od",
                "OU": "ops"
            }
        ]
    }

List in the `hosts` section every IP that might later become a kubelet node. New kubelet nodes with those IPs can then be added without reissuing the certificate.

Sign the certificate:

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssl-json -bare kubelet
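Since the `hosts` list is the part of the CSR most likely to change, it can help to generate the file from a list of IPs rather than edit it by hand. The following is a minimal sketch; `gen_kubelet_csr` is a hypothetical helper name that is not part of cfssl, and the other fields are copied from the CSR above.

```shell
#!/bin/sh
# Hypothetical helper (not part of cfssl): print a kubelet CSR JSON
# whose "hosts" array contains every IP passed as an argument.
gen_kubelet_csr() {
    hosts=""
    for ip in "$@"; do
        # Build a comma-separated list of quoted JSON strings.
        if [ -z "$hosts" ]; then
            hosts="\"$ip\""
        else
            hosts="$hosts, \"$ip\""
        fi
    done
    cat <<EOF
{
  "CN": "k8s-kubelet",
  "hosts": [ $hosts ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "beijing", "L": "beijing", "O": "od", "OU": "ops" }
  ]
}
EOF
}

# Example (writes the file used by cfssl gencert above):
#   gen_kubelet_csr 127.0.0.1 10.4.7.10 10.4.7.21 10.4.7.22 > kubelet-csr.json
```

The function only prints to standard output, so the result can be inspected before it overwrites an existing `kubelet-csr.json`.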

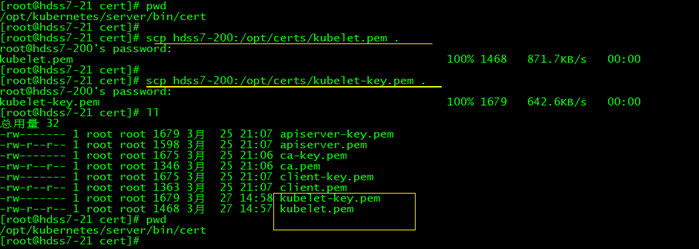

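Copy the certificates to each compute node, then create the configuration. Run the following on both 10.4.7.21 and 10.4.7.22 to copy the certificates into the cert directory:

    cd /opt/kubernetes/server/bin/cert
    scp hdss7-200:/opt/certs/kubelet.pem .
    scp hdss7-200:/opt/certs/kubelet-key.pem .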

Start on 10.4.7.21 and create the `kubelet.kubeconfig` configuration file for kubelet. It only needs to be created on one kubelet node. The file lives under /opt/kubernetes/server/bin/conf/ and takes four steps to build.

1. set-cluster

    cd /opt/kubernetes/server/bin/conf/
    kubectl config set-cluster myk8s \
      --certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \
      --embed-certs=true \
      --server=https://10.4.7.10:7443 \
      --kubeconfig=kubelet.kubeconfig

2. set-credentials

    cd /opt/kubernetes/server/bin/conf/
    kubectl config set-credentials k8s-node \
      --client-certificate=/opt/kubernetes/server/bin/cert/client.pem \
      --client-key=/opt/kubernetes/server/bin/cert/client-key.pem \
      --embed-certs=true \
      --kubeconfig=kubelet.kubeconfig

3. set-context

    cd /opt/kubernetes/server/bin/conf/
    kubectl config set-context myk8s-context \
      --cluster=myk8s \
      --user=k8s-node \
      --kubeconfig=kubelet.kubeconfig

4. use-context

    cd /opt/kubernetes/server/bin/conf/
    kubectl config use-context myk8s-context --kubeconfig=kubelet.kubeconfig
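After the four steps, a quick text check on the generated file can catch a wrong server address or a missing embedded certificate before kubelet is started. This is a rough sketch: `check_kubeconfig` is a hypothetical helper, and it only greps for the keys that kubectl writes when `--embed-certs=true` is used. It does not validate the certificates themselves.

```shell
#!/bin/sh
# Hypothetical helper: text-level sanity check of a kubeconfig file.
# $1 = kubeconfig path, $2 = expected server URL, $3 = expected current context
check_kubeconfig() {
    file=$1
    server=$2
    context=$3
    grep -qF -- "server: $server" "$file" \
        || { echo "missing server $server"; return 1; }
    grep -qF -- "current-context: $context" "$file" \
        || { echo "missing current-context $context"; return 1; }
    # With --embed-certs=true the CA, client cert and client key are
    # stored inline under these keys instead of as file paths.
    for key in certificate-authority-data client-certificate-data client-key-data; do
        grep -qF -- "$key:" "$file" \
            || { echo "missing $key"; return 1; }
    done
    echo "ok"
}

# Example:
#   check_kubeconfig kubelet.kubeconfig https://10.4.7.10:7443 myk8s-context
```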

Create the `k8s-node.yaml` configuration file:

    cd /opt/kubernetes/server/bin/conf/
    vi k8s-node.yaml

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: k8s-node
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:node
    subjects:
    - apiGroup: rbac.authorization.k8s.io
      kind: User
      name: k8s-node

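This defines a Kubernetes user named k8s-node and binds it to the `system:node` cluster role, which gives the k8s-node user permission to act as a compute node. Create the binding:

    cd /opt/kubernetes/server/bin/conf/
    kubectl create -f k8s-node.yaml

Check the result:

    kubectl get clusterrolebinding k8s-node

    kubectl get clusterrolebinding k8s-node -o yaml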

Next, on 10.4.7.22, copy the `kubelet.kubeconfig` created on 10.4.7.21 straight into /opt/kubernetes/server/bin/conf:

    cd /opt/kubernetes/server/bin/conf
    scp hdss7-21:/opt/kubernetes/server/bin/conf/kubelet.kubeconfig .

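The cluster role binding created earlier on 10.4.7.21 does not need to be created again.

Prepare the pause base image (the pause image is open-sourced by Google). On hdss7-200.host.com (10.4.7.200):

    docker pull kubernetes/pause

Tag the pulled image (f9d5de079539 is its image ID here) and push it to the private registry:

    docker tag f9d5de079539 harbor.od.com/public/pause:latest
    docker push harbor.od.com/public/pause:latest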

Create the kubelet startup script on both 10.4.7.21 and 10.4.7.22:

    cd /opt/kubernetes/server/bin
    vim /opt/kubernetes/server/bin/kubelet.sh

    #!/bin/sh
    ./kubelet \
      --anonymous-auth=false \
      --cgroup-driver systemd \
      --cluster-dns 192.168.0.2 \
      --cluster-domain cluster.local \
      --runtime-cgroups=/systemd/system.slice \
      --kubelet-cgroups=/systemd/system.slice \
      --fail-swap-on="false" \
      --client-ca-file ./cert/ca.pem \
      --tls-cert-file ./cert/kubelet.pem \
      --tls-private-key-file ./cert/kubelet-key.pem \
      --hostname-override hdss7-21.host.com \
      --image-gc-high-threshold 20 \
      --image-gc-low-threshold 10 \
      --kubeconfig ./conf/kubelet.kubeconfig \
      --log-dir /data/logs/kubernetes/kube-kubelet \
      --pod-infra-container-image harbor.od.com/public/pause:latest \
      --root-dir /data/kubelet

Create the log and data directories and make the script executable:

    mkdir -p /data/logs/kubernetes/kube-kubelet /data/kubelet
    chmod +x /opt/kubernetes/server/bin/kubelet.sh

On 10.4.7.22, change the hostname line to `--hostname-override hdss7-22.host.com \`; everything else stays the same.

supervisor configuration, first on 10.4.7.21:

    cd /etc/supervisord.d/
    vi /etc/supervisord.d/kube-kubelet.ini

    [program:kube-kubelet-7-21]
    command=/opt/kubernetes/server/bin/kubelet.sh
    numprocs=1
    directory=/opt/kubernetes/server/bin
    autostart=true
    autorestart=true
    startsecs=30
    startretries=3
    exitcodes=0,2
    stopsignal=QUIT
    stopwaitsecs=10
    user=root
    redirect_stderr=true
    stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log
    stdout_logfile_maxbytes=64MB
    stdout_logfile_backups=4
    stdout_capture_maxbytes=1MB
    stdout_events_enabled=false

Load the new program into supervisor:

    supervisorctl update

On 10.4.7.22, /etc/supervisord.d/kube-kubelet.ini uses a different program name:

    [program:kube-kubelet-7-22]
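Because the two nodes differ only in the `--hostname-override` value and the supervisor program name, the second node's files can be derived from the first node's copies instead of being retyped. The sketch below assumes the naming pattern used in this walkthrough (`hdss7-21.host.com`, `kube-kubelet-7-21`); `retarget_node_file` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the node-specific names in a file copied
# from another node and print the result to standard output.
# $1 = source file, $2 = old short name (e.g. 7-21), $3 = new short name (e.g. 7-22)
retarget_node_file() {
    src=$1
    old=$2
    new=$3
    sed -e "s/hdss$old\.host\.com/hdss$new.host.com/g" \
        -e "s/\[program:kube-kubelet-$old]/[program:kube-kubelet-$new]/g" \
        "$src"
}

# Example, run on 10.4.7.22 after copying both files from 10.4.7.21:
#   retarget_node_file kubelet.sh 7-21 7-22 > kubelet.sh.new
#   retarget_node_file kube-kubelet.ini 7-21 7-22 > kube-kubelet.ini.new
```

Writing to a separate `.new` file keeps the original intact until the result has been reviewed.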

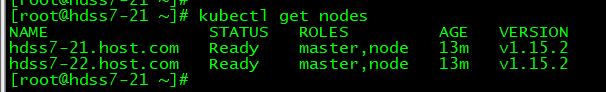

Check whether the nodes have joined the cluster:

    kubectl get nodes
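When this check is repeated often, the STATUS column can be tested in a script instead of read by eye. A minimal sketch, assuming the default table output of `kubectl get nodes` (header line first, STATUS in the second column); `all_nodes_ready` is a hypothetical helper that reads that table from standard input.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get nodes` output on stdin and
# fail if any node's STATUS column is not exactly "Ready".
all_nodes_ready() {
    awk 'NR > 1 && $2 != "Ready" { bad = 1; print "not ready: " $1 }
         END { exit bad + 0 }'
}

# Example:
#   kubectl get nodes | all_nodes_ready && echo "all nodes ready"
```

Note that a cordoned node reports `Ready,SchedulingDisabled` and would be flagged by this strict comparison.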

The ROLES column is empty. Set the role labels from either 10.4.7.21 or 10.4.7.22:

    kubectl label node hdss7-21.host.com node-role.kubernetes.io/master=
    kubectl label node hdss7-21.host.com node-role.kubernetes.io/node=
    kubectl label node hdss7-22.host.com node-role.kubernetes.io/master=
    kubectl label node hdss7-22.host.com node-role.kubernetes.io/node=